OrientationMatchingFourier3d

Estimates the probability that a sample is locally oriented in a user-defined direction.

Access to parameter description

For an introduction:

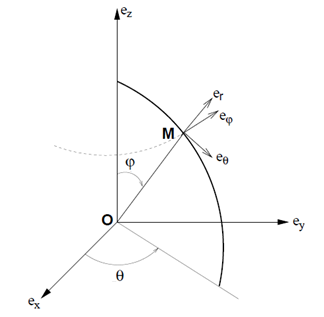

The orientation similarity of a local region with a user-defined orientation is computed by applying the transformation to the inertia matrix, taking into account the changes induced by the basis. Consider the basis change illustrated below.

Figure 1. Basis change from cartesian to spherical coordinate system

One can demonstrate that the transformation of the vector $(V_x, V_y, V_z)$ in the basis $(e_x, e_y, e_z)$ to the vector $(V_r, V_\varphi, V_\theta)$ in the basis $(e_r, e_\varphi, e_\theta)$ is expressed as: $$ \left[ \begin{array}{c} V_r\\ V_\varphi\\ V_\theta\end{array}\right] = \left[ \begin{array}{ccc}; sin (\varphi) \cdot cos (\theta) & sin (\varphi) \cdot sin (\theta) & cos (\varphi)\\ cos (\varphi) \cdot cos (\theta) & cos (\varphi) \cdot sin (\theta) & sin (\varphi)\\ -sin (\theta) & cos (\theta) & 0 \end{array}\right] \left[ \begin{array}{c} V_x\\ V_y\\ V_z\end{array}\right] $$ We denote $P$ this transformation matrix.

Now let $M(x, y, z)$ be the inertia matrix in the basis $(e_x, e_y, e_z)$, the inertia matrix $M(r, \varphi, \theta)$ in the basis $(e_r, e_\varphi, e_\theta)$ is expressed as: $$ M(r, \varphi, \theta) = P \cdot M(x, y, z) \cdot P^{-1}$$

Finally, this algorithm provides two outputs:

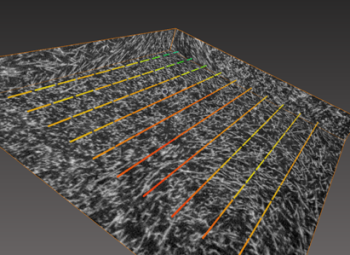

Figure 2. Local orientation matching of a 3D image with user-defined direction

See also

Access to parameter description

For an introduction:

- section Image Analysis

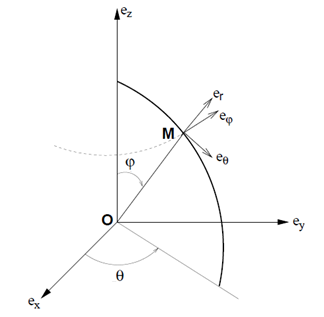

- section Moment And Orientation

The similarity between the orientation of a local region and a user-defined orientation is computed by expressing the inertia matrix of the region in a basis aligned with that orientation. Consider the basis change illustrated below.

Figure 1. Basis change from the Cartesian to the spherical coordinate system

One can show that the transformation of the vector $(V_x, V_y, V_z)$ in the basis $(e_x, e_y, e_z)$ to the vector $(V_r, V_\varphi, V_\theta)$ in the basis $(e_r, e_\varphi, e_\theta)$ is expressed as: $$ \left[ \begin{array}{c} V_r\\ V_\varphi\\ V_\theta\end{array}\right] = \left[ \begin{array}{ccc} \sin (\varphi) \cdot \cos (\theta) & \sin (\varphi) \cdot \sin (\theta) & \cos (\varphi)\\ \cos (\varphi) \cdot \cos (\theta) & \cos (\varphi) \cdot \sin (\theta) & -\sin (\varphi)\\ -\sin (\theta) & \cos (\theta) & 0 \end{array}\right] \left[ \begin{array}{c} V_x\\ V_y\\ V_z\end{array}\right] $$ We denote this transformation matrix by $P$.
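As a quick check of this formula, consider the default angles $\varphi = 0$ and $\theta = 0$ (a worked substitution added for illustration). Since $\sin(0) = 0$ and $\cos(0) = 1$, the matrix reduces to a permutation of the axes:

$$ P = \left[ \begin{array}{ccc} 0 & 0 & 1\\ 1 & 0 & 0\\ 0 & 1 & 0 \end{array}\right], \qquad V_r = V_z, \quad V_\varphi = V_x, \quad V_\theta = V_y $$

In this case the radial direction $e_r$ coincides with $e_z$.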

Now let $M(x, y, z)$ be the inertia matrix in the basis $(e_x, e_y, e_z)$. The inertia matrix $M(r, \varphi, \theta)$ in the basis $(e_r, e_\varphi, e_\theta)$ is then expressed as: $$ M(r, \varphi, \theta) = P \cdot M(x, y, z) \cdot P^{-1}$$
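The change of basis above can be sketched in a few lines of plain Python. This is only an illustration, not the library's implementation: the helper names (`spherical_basis_matrix`, `change_basis`) and the sample inertia matrix are hypothetical. The sketch assumes the standard orthonormal spherical basis, in which the third entry of the $e_\varphi$ row is $-\sin(\varphi)$, so that $P$ is orthogonal and $P^{-1}$ can be replaced by the transpose of $P$. Angles are given in degrees, as for the phiAngle and thetaAngle parameters.

```python
import math


def spherical_basis_matrix(phi_deg, theta_deg):
    """Return P; its rows are e_r, e_phi, e_theta expressed in (e_x, e_y, e_z)."""
    phi = math.radians(phi_deg)      # polar angle
    theta = math.radians(theta_deg)  # azimuthal angle
    sp, cp = math.sin(phi), math.cos(phi)
    st, ct = math.sin(theta), math.cos(theta)
    return [
        [sp * ct, sp * st, cp],
        [cp * ct, cp * st, -sp],
        [-st, ct, 0.0],
    ]


def matmul(a, b):
    """Product of two 3x3 matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]


def transpose(a):
    """Transpose of a 3x3 matrix stored as nested lists."""
    return [list(row) for row in zip(*a)]


def change_basis(m_xyz, phi_deg, theta_deg):
    """Compute P * M * P^-1, using P^-1 = P^T (assumes P is orthogonal)."""
    p = spherical_basis_matrix(phi_deg, theta_deg)
    return matmul(matmul(p, m_xyz), transpose(p))


# Hypothetical inertia matrix, diagonal in the Cartesian basis.
m_xyz = [[1.0, 0.0, 0.0],
         [0.0, 2.0, 0.0],
         [0.0, 0.0, 5.0]]

# With phi = theta = 0, e_r coincides with e_z.
m_sph = change_basis(m_xyz, 0.0, 0.0)
print(m_sph[0][0])  # radial component, taken from the z axis of m_xyz
```

With both angles at 0, the radial entry of the transformed matrix is the original z entry, which matches the fact that $e_r$ and $e_z$ coincide for these angles.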

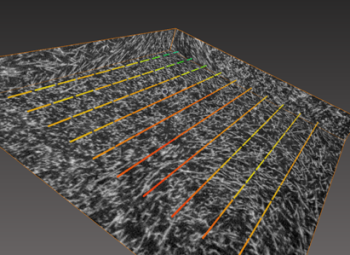

Finally, this algorithm provides two outputs:

- An image that, for each block, shows its similarity with the input orientation, equal to the confidence of the OrientationMapFourier3d algorithm multiplied by 100

- A measurement object that, for each block, provides all computed features (confidence, moments, eigenvalues, ...)

Figure 2. Local orientation matching of a 3D image with user-defined direction
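The relation between the confidence and the intensity of the output image can be sketched as follows. This is a minimal illustration rather than the library's code: the function name `similarity_score` is hypothetical, the confidence is assumed to lie between 0 and 1, and the rounding and clamping are assumptions, since the text only states that the intensity is the confidence multiplied by 100.

```python
def similarity_score(confidence):
    """Map a confidence (assumed in [0, 1]) to a percentage score."""
    score = round(confidence * 100.0)  # rounding mode is an assumption
    return max(0, min(100, score))     # clamp to the percent range


print(similarity_score(0.5))
```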

Function Syntax

In C++, this function returns an OrientationMatchingFourier3dOutput structure containing the outputImage and outputMeasurement output parameters.

// Output structure.

struct OrientationMatchingFourier3dOutput

{

std::shared_ptr< iolink::ImageView > outputImage;

LocalOrientation3dMsr::Ptr outputMeasurement;

};

// Function prototype.

OrientationMatchingFourier3dOutput

orientationMatchingFourier3d( std::shared_ptr< iolink::ImageView > inputImage,

int32_t blockSize,

OrientationMatchingFourier3d::BlockOverlap blockOverlap,

int32_t minThreshold,

int32_t maxThreshold,

double phiAngle,

double thetaAngle,

std::shared_ptr< iolink::ImageView > outputImage = NULL,

LocalOrientation3dMsr::Ptr outputMeasurement = NULL );

In Python, this function returns a tuple containing the output_image and output_measurement output parameters.

# Function prototype.

orientation_matching_fourier_3d( input_image,

block_size = 64,

block_overlap = OrientationMatchingFourier3d.BlockOverlap.YES,

min_threshold = 100,

max_threshold = 300,

phi_angle = 0,

theta_angle = 0,

output_image = None,

output_measurement = None )

In C#, this function returns an OrientationMatchingFourier3dOutput structure containing the outputImage and outputMeasurement output parameters.

/// Output structure of the OrientationMatchingFourier3d function.

public struct OrientationMatchingFourier3dOutput

{

public IOLink.ImageView outputImage;

public LocalOrientation3dMsr outputMeasurement;

};

// Function prototype.

public static OrientationMatchingFourier3dOutput

OrientationMatchingFourier3d( IOLink.ImageView inputImage,

Int32 blockSize = 64,

OrientationMatchingFourier3d.BlockOverlap blockOverlap = ImageDev.OrientationMatchingFourier3d.BlockOverlap.YES,

Int32 minThreshold = 100,

Int32 maxThreshold = 300,

double phiAngle = 0,

double thetaAngle = 0,

IOLink.ImageView outputImage = null,

LocalOrientation3dMsr outputMeasurement = null );

Class Syntax

Parameters

| Class Name | OrientationMatchingFourier3d |
|---|---|

| Parameter Name | Description | Type | Supported Values | Default Value |
|---|---|---|---|---|
| inputImage | The grayscale input image. | Image | Grayscale | nullptr |
| blockSize | The side size in pixels of the cubic blocks. | Int32 | >=1 | 64 |
| blockOverlap | The policy for splitting the image into blocks. | Enumeration | | YES |
| minThreshold | The minimum threshold value applied to the input grayscale image. Voxels having an intensity lower than this threshold are not considered by the algorithm. | Int32 | >=1 | 100 |
| maxThreshold | The maximum threshold value applied to the input grayscale image. Voxels having an intensity greater than this threshold are not considered by the algorithm. | Int32 | >=1 | 300 |
| phiAngle | The polar angle, in degrees, of the analysis orientation. | Float64 | Any value | 0 |
| thetaAngle | The azimuthal angle, in degrees, of the analysis orientation. | Float64 | Any value | 0 |
| outputImage | The grayscale output image representing sticks oriented in the user-defined direction. Intensities correspond to a similarity score with the input orientation in percent. Image dimensions are forced to the same values as the input. Its type is unsigned 8-bit integer. | Image | | nullptr |
| outputMeasurement | The output measurement result for each block. | LocalOrientation3dMsr | | nullptr |
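The effect of minThreshold and maxThreshold can be illustrated with a short sketch. It is not the library's implementation: the helper name `is_considered` and the sample intensities are hypothetical. The bounds are treated as inclusive, following the wording that only voxels strictly lower than the minimum or strictly greater than the maximum are ignored.

```python
def is_considered(intensity, min_threshold=100, max_threshold=300):
    """Return True if a voxel intensity lies within the threshold range."""
    return min_threshold <= intensity <= max_threshold


# Hypothetical voxel intensities, filtered with the default thresholds.
voxels = [50, 100, 180, 300, 301]
kept = [v for v in voxels if is_considered(v)]
print(kept)
```

Only the voxels that pass this test would contribute to the orientation estimate of their block.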

Object Examples

auto foam = readVipImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "foam.vip" );

OrientationMatchingFourier3d orientationMatchingFourier3dAlgo;

orientationMatchingFourier3dAlgo.setInputImage( foam );

orientationMatchingFourier3dAlgo.setBlockSize( 10 );

orientationMatchingFourier3dAlgo.setBlockOverlap( OrientationMatchingFourier3d::BlockOverlap::YES );

orientationMatchingFourier3dAlgo.setMinThreshold( 100 );

orientationMatchingFourier3dAlgo.setMaxThreshold( 300 );

orientationMatchingFourier3dAlgo.setPhiAngle( 0 );

orientationMatchingFourier3dAlgo.setThetaAngle( 0 );

orientationMatchingFourier3dAlgo.execute();

std::cout << "outputImage:" << orientationMatchingFourier3dAlgo.outputImage()->toString();

std::cout << "blockOriginX: " << orientationMatchingFourier3dAlgo.outputMeasurement()->blockOriginX( 0 );

foam = imagedev.read_vip_image(imagedev_data.get_image_path("foam.vip"))

orientation_matching_fourier_3d_algo = imagedev.OrientationMatchingFourier3d()

orientation_matching_fourier_3d_algo.input_image = foam

orientation_matching_fourier_3d_algo.block_size = 10

orientation_matching_fourier_3d_algo.block_overlap = imagedev.OrientationMatchingFourier3d.YES

orientation_matching_fourier_3d_algo.min_threshold = 100

orientation_matching_fourier_3d_algo.max_threshold = 300

orientation_matching_fourier_3d_algo.phi_angle = 0

orientation_matching_fourier_3d_algo.theta_angle = 0

orientation_matching_fourier_3d_algo.execute()

print( "output_image:", str( orientation_matching_fourier_3d_algo.output_image ) )

print( "blockOriginX: ", orientation_matching_fourier_3d_algo.output_measurement.block_origin_x( 0 ) )

ImageView foam = Data.ReadVipImage( @"Data/images/foam.vip" );

OrientationMatchingFourier3d orientationMatchingFourier3dAlgo = new OrientationMatchingFourier3d

{

inputImage = foam,

blockSize = 10,

blockOverlap = OrientationMatchingFourier3d.BlockOverlap.YES,

minThreshold = 100,

maxThreshold = 300,

phiAngle = 0,

thetaAngle = 0

};

orientationMatchingFourier3dAlgo.Execute();

Console.WriteLine( "outputImage:" + orientationMatchingFourier3dAlgo.outputImage.ToString() );

Console.WriteLine( "blockOriginX: " + orientationMatchingFourier3dAlgo.outputMeasurement.blockOriginX( 0 ) );

Function Examples

auto foam = readVipImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "foam.vip" );

auto result = orientationMatchingFourier3d( foam, 10, OrientationMatchingFourier3d::BlockOverlap::YES, 100, 300, 0, 0 );

std::cout << "outputImage:" << result.outputImage->toString();

std::cout << "blockOriginX: " << result.outputMeasurement->blockOriginX( 0 );

foam = imagedev.read_vip_image(imagedev_data.get_image_path("foam.vip"))

result_output_image, result_output_measurement = imagedev.orientation_matching_fourier_3d( foam, 10, imagedev.OrientationMatchingFourier3d.YES, 100, 300, 0, 0 )

print( "output_image:", str( result_output_image ) )

print( "blockOriginX: ", result_output_measurement.block_origin_x( 0 ) )

ImageView foam = Data.ReadVipImage( @"Data/images/foam.vip" );

Processing.OrientationMatchingFourier3dOutput result = Processing.OrientationMatchingFourier3d( foam, 10, OrientationMatchingFourier3d.BlockOverlap.YES, 100, 300, 0, 0 );

Console.WriteLine( "outputImage:" + result.outputImage.ToString() );

Console.WriteLine( "blockOriginX: " + result.outputMeasurement.blockOriginX( 0 ) );