AffineRegistration

Computes the best transformation for the co-registration of two images, using an iterative optimization algorithm.


AffineRegistration computes the best transformation for the co-registration of two images, using an iterative optimization algorithm.

The goal of registration is to find a transformation that aligns a moving image onto a fixed image, starting from an initial transformation and optimizing a similarity criterion between the two images.

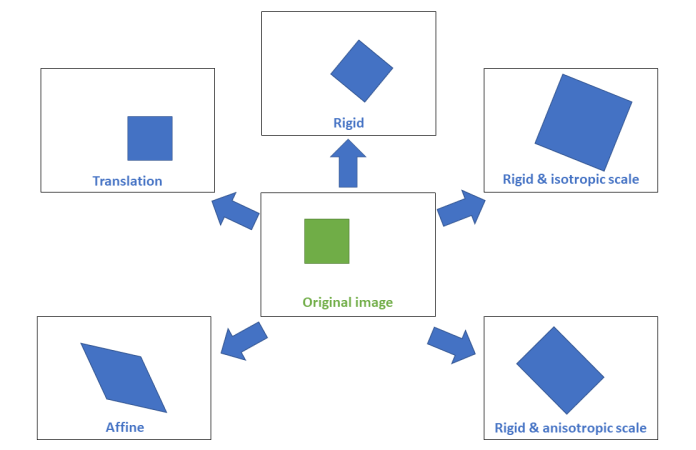

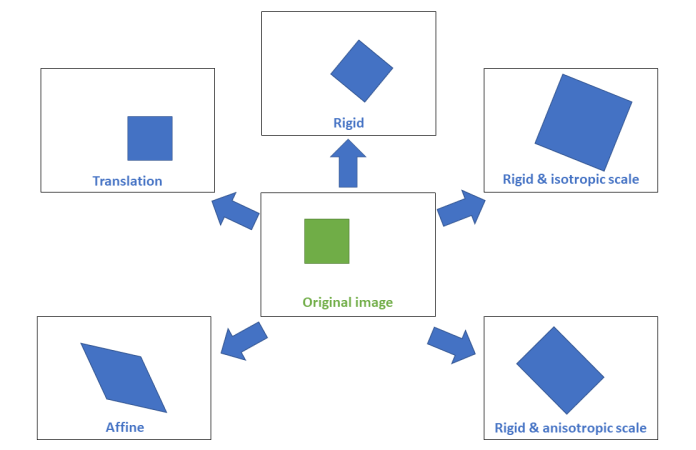

The estimated transformation can be a pure translation, rigid (translation and rotation only), rigid with scale factors (isotropic or anisotropic along the axis directions), or affine (including shear).

Figure 1. Types of affine transformations
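As an illustration of these transformation families, the following minimal sketch builds 4x4 homogeneous matrices in plain Python and composes them. The helper names (`matmul`, `translation`, `rotation_z`, `scaling`, `shear_xy`) are hypothetical and not part of the ImageDev API; the degrees-of-freedom counts are the usual ones for 3D transformations.

```python
import math


def matmul(a, b):
    """Multiply two 4x4 matrices stored as nested lists (row-major)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(tx, ty, tz):
    return [[1, 0, 0, tx], [0, 1, 0, ty], [0, 0, 1, tz], [0, 0, 0, 1]]


def rotation_z(angle_rad):
    c, s = math.cos(angle_rad), math.sin(angle_rad)
    return [[c, -s, 0, 0], [s, c, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


def scaling(sx, sy, sz):
    return [[sx, 0, 0, 0], [0, sy, 0, 0], [0, 0, sz, 0], [0, 0, 0, 1]]


def shear_xy(k):
    """Shear that shifts x proportionally to y."""
    return [[1, k, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]


# Usual number of free parameters of each family in 3D.
DEGREES_OF_FREEDOM = {
    "translation": 3,
    "rigid": 6,
    "rigid + isotropic scale": 7,
    "rigid + anisotropic scale": 9,
    "affine": 12,
}

# Rigid: a rotation followed by a translation.
rigid = matmul(translation(10, 0, 0), rotation_z(math.pi / 2))
# Affine: the rigid part combined with anisotropic scaling and shear.
affine = matmul(rigid, matmul(scaling(2, 1, 1), shear_xy(0.5)))
```

Each family contains the previous one, which is why the number of degrees of freedom grows from translation to affine.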

A hierarchical strategy is applied, starting at a coarse resampling of the data set and proceeding to finer resolutions. Several similarity measures, such as Euclidean distance, mutual information, and correlation, can be selected. After each iteration, a similarity score is computed and the transformation is refined according to the optimizer algorithm. If this score cannot be computed, for instance when the resampling or step parameters are not suited to the data, it remains at its default value of -1000.
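To give an intuition of what a similarity score measures, here is a minimal sketch of two of the measures named above, written for flat lists of intensities. These are textbook formulas given as an assumption; the exact expressions used internally by AffineRegistration are not documented here, and the function names are hypothetical.

```python
import math


def euclidean_distance(fixed, moving):
    """Square root of the summed squared intensity differences (0 = identical)."""
    return math.sqrt(sum((f - m) ** 2 for f, m in zip(fixed, moving)))


def normalized_correlation(fixed, moving):
    """Pearson correlation of intensities (1 = perfectly linearly related)."""
    n = len(fixed)
    mean_f = sum(fixed) / n
    mean_m = sum(moving) / n
    cov = sum((f - mean_f) * (m - mean_m) for f, m in zip(fixed, moving))
    var_f = sum((f - mean_f) ** 2 for f in fixed)
    var_m = sum((m - mean_m) ** 2 for m in moving)
    if var_f == 0 or var_m == 0:
        return None  # the score cannot be computed for a constant signal
    return cov / math.sqrt(var_f * var_m)


fixed = [0, 10, 20, 30, 20, 10, 0]
brighter = [2 * v + 5 for v in fixed]   # same structure, different contrast
shifted = [10, 0, 0, 10, 20, 30, 20]    # same structure, misaligned

aligned_score = normalized_correlation(fixed, brighter)
misaligned_score = normalized_correlation(fixed, shifted)
```

In this toy case the correlation stays maximal under a linear change of contrast, whereas the Euclidean distance does not, which hints at why the choice of measure depends on how the intensities of the two images relate.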

The optimizer behavior depends on the optimizerStep parameter, which affects the search extent, precision, and computation time. A small optimizerStep is recommended when a pre-alignment has been performed, in order to be more precise and to avoid driving the transformation to a wrong location.

Different optimization algorithms can be selected with the optimizerType parameter.

Note that none of them can guarantee reaching a globally optimal alignment. The registration result thus depends on the initial alignment of the data sets, as well as on the optimizer steps.
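The dependence on the initial alignment and on the step size can be illustrated with a toy one-dimensional search. This is only a sketch under stated assumptions: `best_neighbor_search` and `score` are hypothetical names, the score profile is invented, and the loop is a simplified stand-in, not the optimizer implemented by AffineRegistration.

```python
def best_neighbor_search(score, start, step, max_iterations=100):
    """Repeatedly move by +/- step while a neighbor has a strictly better score."""
    position = start
    for _ in range(max_iterations):
        best = max((position - step, position + step), key=score)
        if score(best) <= score(position):
            break  # no neighbor improves the score: a (possibly local) optimum
        position = best
    return position


def score(shift):
    # Toy similarity profile: global peak (1.0) at shift = 10,
    # lower local peak (0.5) at shift = -5, zero elsewhere.
    return max(1.0 - abs(shift - 10) / 5.0, 0.5 - abs(shift + 5) / 5.0, 0.0)


near_start = best_neighbor_search(score, start=7.0, step=1.0)    # 10.0, global peak
far_start = best_neighbor_search(score, start=-7.0, step=1.0)    # -5.0, local peak
coarse = best_neighbor_search(score, start=-7.0, step=15.0)      # 8.0, jumps over the local peak
refined = best_neighbor_search(score, start=coarse, step=1.0)    # 10.0 after refinement
```

With a small step, the result depends entirely on the starting point. A large step can escape the local peak but stops at an imprecise position, which a second pass with a smaller step then refines.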

Furthermore, note that whatever optimizerType is chosen, a quasi-Newton optimizer is used on the finest level computed, unless there is only one level. This optimizer is best suited to finer resolution levels, where it refines the transformation.

By default, the coarsestResampling and optimizerStep parameters are automatically estimated from the fixed image properties. If the moving and fixed images have different resolutions or sizes (for instance, in multi-modality cases), these settings may be inappropriate and cause the registration to fail. In this case, the autoParameterMode parameter should be set to false and both parameters should be manually set to relevant values, so that the coarsest resolution level generates a representative volume (not made of too few voxels) and the displacement step is precise enough not to skip the searched transformation.
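A quick way to check whether a manually chosen coarsestResampling still yields a representative volume is to count the voxels left at the coarsest level. The sketch below assumes that the voxel count per axis is the image size divided by the resampling factor, rounded up, and borrows the threshold of 30 voxels per direction from the automatic mode description as a rule of thumb; both function names are hypothetical.

```python
def coarsest_level_shape(fixed_shape, coarsest_resampling):
    """Voxels per axis at the coarsest level (size / factor, rounded up)."""
    return tuple(-(-size // factor)
                 for size, factor in zip(fixed_shape, coarsest_resampling))


def is_representative(fixed_shape, coarsest_resampling, min_voxels=30):
    """True if every axis keeps at least min_voxels voxels (assumed threshold)."""
    shape = coarsest_level_shape(fixed_shape, coarsest_resampling)
    return all(n >= min_voxels for n in shape)


# A 512 x 512 x 200 volume resampled by 8 keeps only 25 slices along Z.
too_coarse = is_representative((512, 512, 200), (8, 8, 8))   # False
# Resampling Z by 4 instead keeps 50 slices.
acceptable = is_representative((512, 512, 200), (8, 8, 4))   # True
```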

If the two input images have been carefully pre-aligned, it is not recommended to perform the registration at too low a sub-resolution level. Doing so would not only perform useless computations but could also drive the transformation to a wrong location and thus miss the right transformation. Consequently, the following recommendations can be applied in this case:

- Do not use automatic parameters; that is, set autoParameterMode to false.

- Set the coarsest resolution level at half of the original resolution; that is, set the coarsestResampling parameter to (2,2,2).

- Set the optimizerStep parameter with half of the voxel size of the fixed image for finest resolution and the voxel size of fixed image for coarsest resolution.
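These three recommendations can be gathered in a small helper. The sketch below is an assumption-laden illustration: `prealigned_parameters` is a hypothetical name, the fixed image is assumed to have isotropic voxels described by a single size, and the keys reuse the keyword names of the Python function prototype.

```python
def prealigned_parameters(fixed_voxel_size):
    """Keyword arguments following the recommendations for pre-aligned images.

    fixed_voxel_size is a single number: isotropic voxels are assumed.
    """
    return {
        # Do not use automatic parameters.
        "auto_parameter_mode": False,
        # Coarsest level at half of the original resolution.
        "coarsest_resampling": [2, 2, 2],
        # [step at the coarsest level, step at the finest level].
        "optimizer_step": [fixed_voxel_size, fixed_voxel_size / 2.0],
    }


params = prealigned_parameters(0.8)
# params["optimizer_step"] is [0.8, 0.4]
```

The returned dictionary could presumably be expanded into a call such as `imagedev.affine_registration(moving, fixed, **params)`, where `moving` and `fixed` are placeholder image variables; that call is not exercised here.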

More information on the callback functions can be found in the Processing interaction section.

References

The Correlation Ratio and Mutual Information metrics are explained in the following publications:

- A. Roche, G. Malandain, X. Pennec, and N. Ayache. "The Correlation Ratio as a New Similarity Measure for Multimodal Image Registration". INRIA Sophia Antipolis, EPIDAURE project, 1998.

- C. Studholme, D. L. G. Hill, and D. J. Hawkes. "An Overlap Invariant Entropy Measure of 3D Medical Image Alignment". Pattern Recognition, vol. 32, pp. 71-86, 1999.

- P. A. Viola. "Alignment by Maximization of Mutual Information". PhD thesis, Massachusetts Institute of Technology, 1995.

- A. Collignon, F. Maes, D. Delaere, D. Vandermeulen, P. Suetens, and G. Marchal. "Automated Multi-modality Image Registration Based on Information Theory". IPMI, Dordrecht, Netherlands: Kluwer Academic Publishers, pp. 263-274, 1995.

Function Syntax

This function returns the outputTransform output parameter.

// Function prototype (C++).

iolink::Matrix4d

affineRegistration( std::shared_ptr< iolink::ImageView > inputMovingImage,

std::shared_ptr< iolink::ImageView > inputFixedImage,

iolink::Matrix4d initialTransform,

bool autoParameterMode,

iolink::Vector2d optimizerStep,

iolink::Vector2d intensityRangeFixed,

iolink::Vector2d intensityRangeMoving,

iolink::Vector3i32 coarsestResampling,

AffineRegistration::RangeModeFixed rangeModeFixed,

AffineRegistration::RangeModeMoving rangeModeMoving,

AffineRegistration::TransformType transformType,

bool ignoreFinestLevel,

AffineRegistration::MetricType metricType,

double thresholdOutside,

AffineRegistration::OptimizerType optimizerType );

This function returns the outputTransform output parameter.

# Function prototype (Python).

affine_registration( input_moving_image,

input_fixed_image,

initial_transform = _np.identity(4),

auto_parameter_mode = True,

optimizer_step = [4, 0.5],

intensity_range_fixed = [0, 65535],

intensity_range_moving = [0, 65535],

coarsest_resampling = [8, 8, 8],

range_mode_fixed = AffineRegistration.RangeModeFixed.R_MIN_MAX,

range_mode_moving = AffineRegistration.RangeModeMoving.M_MIN_MAX,

transform_type = AffineRegistration.TransformType.RIGID,

ignore_finest_level = False,

metric_type = AffineRegistration.MetricType.CORRELATION,

threshold_outside = 0.2,

optimizer_type = AffineRegistration.OptimizerType.EXTENSIVE_DIRECTION )

This function returns the outputTransform output parameter.

// Function prototype (C#).

public static IOLink.Matrix4d

AffineRegistration( IOLink.ImageView inputMovingImage,

IOLink.ImageView inputFixedImage,

IOLink.Matrix4d initialTransform = null,

bool autoParameterMode = true,

double[] optimizerStep = null,

double[] intensityRangeFixed = null,

double[] intensityRangeMoving = null,

int[] coarsestResampling = null,

AffineRegistration.RangeModeFixed rangeModeFixed = ImageDev.AffineRegistration.RangeModeFixed.R_MIN_MAX,

AffineRegistration.RangeModeMoving rangeModeMoving = ImageDev.AffineRegistration.RangeModeMoving.M_MIN_MAX,

AffineRegistration.TransformType transformType = ImageDev.AffineRegistration.TransformType.RIGID,

bool ignoreFinestLevel = false,

AffineRegistration.MetricType metricType = ImageDev.AffineRegistration.MetricType.CORRELATION,

double thresholdOutside = 0.2,

AffineRegistration.OptimizerType optimizerType = ImageDev.AffineRegistration.OptimizerType.EXTENSIVE_DIRECTION );

Class Syntax

Parameters

| Class Name | AffineRegistration |
|---|---|

| Parameter Name | Description | Type | Supported Values | Default Value |
|---|---|---|---|---|
| inputMovingImage | The input moving image, also known as the model image. | Image | Grayscale | nullptr |
| inputFixedImage | The input fixed image, also known as the reference image. | Image | Grayscale | nullptr |
| initialTransform | The initial transformation pre-aligning both input data sets, represented by a 4x4 matrix. Default value is the identity matrix. | Matrix4d | | IDENTITY |
| transformType | Selects the type of transformation to estimate. It determines the number of degrees of freedom of the transformation. | Enumeration | | RIGID |
| metricType | The metric type to compute. | Enumeration | | CORRELATION |
| optimizerType | The optimizer strategy. It determines the gradient descent method applied to iteratively modify the transformation and produce a better similarity measure. The extensive direction, best neighbor, and line search strategies are best suited for registering two images that are highly misaligned. The quasi-Newton and conjugate gradient strategies are particularly efficient for refining a transformation after a pre-alignment step. | Enumeration | | EXTENSIVE_DIRECTION |
| autoParameterMode | Determines whether the coarsest resampling factor and optimizer steps are computed automatically. If computed automatically, the optimizerStep for the coarsest resolution is 1/5 of the size of the fixed image bounding box; for the finest resolution, it is 1/6 of the fixed image voxel size. For the coarsestResampling, if the voxels of the fixed image are anisotropic (have different sizes in the X, Y, and Z directions), the default resampling rates are around 8 and are adapted to achieve isotropic voxels at the coarsest level. If the voxels of the fixed image are isotropic (have the same size in the X, Y, and Z directions), the default resampling rate is computed to get at least 30 voxels along each direction. | Bool | | true |
| optimizerStep | The step sizes, in world coordinates, used by the optimizer at the coarsest and finest scales. These step sizes refer to translations. For rotations, scalings, and shearings, appropriate values are chosen accordingly. The first value is applied to the coarsest resolution level, the second to the finest level. Steps at intermediate levels are deduced from them. High step values cover a larger registration area but increase the risk of failure. If the input transformation already provides a reasonable alignment, the steps can be set smaller than the values given by the automatic mode in order to reduce computation time and risk of failure. Assuming a voxel size of (1,1,1) and a coarsestResampling of (8,8,8), the default values (4, 0.5) correspond to a displacement of half a voxel at both the coarsest and finest levels. As this is rarely the case, it is essential to set this parameter in relation to the fixed image voxel size if the automatic mode is disabled. This parameter is ignored if autoParameterMode is set to true. | Vector2d | >0 | {4.f, 0.5f} |
| coarsestResampling | The sub-sampling factor along each axis. This parameter defines the resampling rate for the coarsest resolution level, where registration starts. The resampling rate refers to the fixed data set. For instance, a coarsest resampling factor of 8 means that one voxel at the coarsest level of the fixed image corresponds to 8 voxels at its finest level. If the voxel sizes of the moving and fixed images differ, the resampling rates applied to the moving image are adjusted in order to achieve voxel sizes similar to those of the fixed image at the same level. This resampling factor is specified for each dimension of the input volume. This parameter is ignored if autoParameterMode is set to true. | Vector3i32 | >0 | {8, 8, 8} |
| ignoreFinestLevel | Skips the finest level of the pyramid if set to true. | Bool | | false |
| rangeModeMoving | The way to define the intensity range to be considered by the algorithm in the moving image. | Enumeration | | M_MIN_MAX |
| intensityRangeMoving | The range [a,b] of gray values for the moving data set. It works similarly to its fixed data set counterpart parameter. This parameter is ignored if the rangeModeMoving parameter is set to M_MIN_MAX. | Vector2d | Any value | {0.f, 65535.f} |
| rangeModeFixed | The way to define the intensity range to be considered by the algorithm in the fixed image. | Enumeration | | R_MIN_MAX |
| intensityRangeFixed | The range [a,b] of gray values for the fixed data set. With the Mutual information metrics, gray values outside the range are sorted into the first or last histogram bin, respectively. With the Correlation metric, voxels with gray values outside the range are ignored. This parameter is ignored with the Euclidean metric or if the rangeModeFixed parameter is set to R_MIN_MAX. | Vector2d | Any value | {0.f, 65535.f} |
| thresholdOutside | The factor used to define the importance of image coverage. The final model position can have different overlaps with the reference data set. For example, the model can lie completely inside the reference, or it can share only a small overlapping area. The overlapping area refers to the space that is common to the bounding boxes of both data sets. This parameter lets you use your prior knowledge about the overlap. Its values are between 0 and 1. If you expect the model and the reference to have a large overlap, set a large value. Due to numerical errors, it is not recommended to set the threshold value too close to 1. Values around 0.8 should be suitable. Otherwise, if you know that only small parts are likely to overlap, use a small value or even zero. The higher the value, the stronger the decrease of the metric value if the model has parts outside the bounding box of the reference. If an intensity range is selected with the Correlation or Mutual information metrics, the computed metric value can be radically lowered. In this case, the threshold must thus be reduced proportionally to the amount of data represented by the selected range. | Float64 | >0 | 0.2 |
| outputTransform | The output transformation registering both input data sets, represented by a 4x4 matrix. | Matrix4d | | IDENTITY |
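The optimizerStep description relates the step sizes, given in world coordinates, to displacements expressed in voxels. The following sketch reproduces that arithmetic under the assumption of isotropic voxels and a single resampling factor; `step_in_voxels` is a hypothetical helper, not an ImageDev function.

```python
def step_in_voxels(optimizer_step, voxel_size, coarsest_resampling):
    """Convert (coarsest, finest) world-unit steps into voxel units per level."""
    coarse_step, fine_step = optimizer_step
    # One voxel at the coarsest level spans coarsest_resampling finest voxels.
    coarse_voxel_size = voxel_size * coarsest_resampling
    return coarse_step / coarse_voxel_size, fine_step / voxel_size


# Default steps with unit voxels: half a voxel at both levels.
unit_voxels = step_in_voxels((4.0, 0.5), voxel_size=1.0, coarsest_resampling=8)
# Same steps with 0.25-unit voxels: two voxels at both levels.
small_voxels = step_in_voxels((4.0, 0.5), voxel_size=0.25, coarsest_resampling=8)
```

The second case shows why the default steps should be rescaled to the fixed image voxel size whenever the automatic mode is disabled.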

Object Examples

std::shared_ptr< iolink::ImageView > polystyrene = ioformat::readImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "polystyrene.tif" );

AffineRegistration affineRegistrationAlgo;

affineRegistrationAlgo.setInputMovingImage( polystyrene );

affineRegistrationAlgo.setInputFixedImage( polystyrene );

affineRegistrationAlgo.setInitialTransform( iolink::Matrix4d::identity() );

affineRegistrationAlgo.setAutoParameterMode( true );

affineRegistrationAlgo.setOptimizerStep( {4, 0.5} );

affineRegistrationAlgo.setIntensityRangeFixed( {0, 65535} );

affineRegistrationAlgo.setIntensityRangeMoving( {0, 65535} );

affineRegistrationAlgo.setCoarsestResampling( {8, 8, 8} );

affineRegistrationAlgo.setRangeModeFixed( AffineRegistration::RangeModeFixed::R_MIN_MAX );

affineRegistrationAlgo.setRangeModeMoving( AffineRegistration::RangeModeMoving::M_MIN_MAX );

affineRegistrationAlgo.setTransformType( AffineRegistration::TransformType::RIGID );

affineRegistrationAlgo.setIgnoreFinestLevel( false );

affineRegistrationAlgo.setMetricType( AffineRegistration::MetricType::CORRELATION );

affineRegistrationAlgo.setThresholdOutside( 0.1 );

affineRegistrationAlgo.setOptimizerType( AffineRegistration::OptimizerType::EXTENSIVE_DIRECTION );

affineRegistrationAlgo.execute();

std::cout << "outputTransform:" << affineRegistrationAlgo.outputTransform().toString();

import numpy as np

import imagedev

import imagedev_data

import ioformat

polystyrene = ioformat.read_image(imagedev_data.get_image_path("polystyrene.tif"))

affine_registration_algo = imagedev.AffineRegistration()

affine_registration_algo.input_moving_image = polystyrene

affine_registration_algo.input_fixed_image = polystyrene

affine_registration_algo.initial_transform = np.identity(4)

affine_registration_algo.auto_parameter_mode = True

affine_registration_algo.optimizer_step = [4, 0.5]

affine_registration_algo.intensity_range_fixed = [0, 65535]

affine_registration_algo.intensity_range_moving = [0, 65535]

affine_registration_algo.coarsest_resampling = [8, 8, 8]

affine_registration_algo.range_mode_fixed = imagedev.AffineRegistration.R_MIN_MAX

affine_registration_algo.range_mode_moving = imagedev.AffineRegistration.M_MIN_MAX

affine_registration_algo.transform_type = imagedev.AffineRegistration.RIGID

affine_registration_algo.ignore_finest_level = False

affine_registration_algo.metric_type = imagedev.AffineRegistration.CORRELATION

affine_registration_algo.threshold_outside = 0.1

affine_registration_algo.optimizer_type = imagedev.AffineRegistration.EXTENSIVE_DIRECTION

affine_registration_algo.execute()

print("output_transform:", str(affine_registration_algo.output_transform))

ImageView polystyrene = ViewIO.ReadImage( @"Data/images/polystyrene.tif" );

IOLink.Matrix4d initialTransform = IOLink.Matrix4d.Identity() ;

AffineRegistration affineRegistrationAlgo = new AffineRegistration

{

inputMovingImage = polystyrene,

inputFixedImage = polystyrene,

initialTransform = initialTransform,

autoParameterMode = true,

optimizerStep = new double[]{4, 0.5},

intensityRangeFixed = new double[]{0, 65535},

intensityRangeMoving = new double[]{0, 65535},

coarsestResampling = new int[]{8, 8, 8},

rangeModeFixed = AffineRegistration.RangeModeFixed.R_MIN_MAX,

rangeModeMoving = AffineRegistration.RangeModeMoving.M_MIN_MAX,

transformType = AffineRegistration.TransformType.RIGID,

ignoreFinestLevel = false,

metricType = AffineRegistration.MetricType.CORRELATION,

thresholdOutside = 0.1,

optimizerType = AffineRegistration.OptimizerType.EXTENSIVE_DIRECTION

};

affineRegistrationAlgo.Execute();

Console.WriteLine( "outputTransform:" + affineRegistrationAlgo.outputTransform.ToString() );

Function Examples

std::shared_ptr< iolink::ImageView > polystyrene = ioformat::readImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "polystyrene.tif" );

auto result = affineRegistration( polystyrene, polystyrene, iolink::Matrix4d::identity(), true, {4, 0.5}, {0, 65535}, {0, 65535}, {8, 8, 8}, AffineRegistration::RangeModeFixed::R_MIN_MAX, AffineRegistration::RangeModeMoving::M_MIN_MAX, AffineRegistration::TransformType::RIGID, false, AffineRegistration::MetricType::CORRELATION, 0.1, AffineRegistration::OptimizerType::EXTENSIVE_DIRECTION );

std::cout << "outputTransform:" << result.toString();

import numpy as np

import imagedev

import imagedev_data

import ioformat

polystyrene = ioformat.read_image(imagedev_data.get_image_path("polystyrene.tif"))

result = imagedev.affine_registration( polystyrene, polystyrene, np.identity(4), True, [4, 0.5], [0, 65535], [0, 65535], [8, 8, 8], imagedev.AffineRegistration.R_MIN_MAX, imagedev.AffineRegistration.M_MIN_MAX, imagedev.AffineRegistration.RIGID, False, imagedev.AffineRegistration.CORRELATION, 0.1, imagedev.AffineRegistration.EXTENSIVE_DIRECTION )

print("output_transform:", str(result))

ImageView polystyrene = ViewIO.ReadImage( @"Data/images/polystyrene.tif" );

IOLink.Matrix4d initialTransform = IOLink.Matrix4d.Identity() ;

IOLink.Matrix4d result = Processing.AffineRegistration( polystyrene, polystyrene, initialTransform, true, new double[]{4, 0.5}, new double[]{0, 65535}, new double[]{0, 65535}, new int[]{8, 8, 8}, AffineRegistration.RangeModeFixed.R_MIN_MAX, AffineRegistration.RangeModeMoving.M_MIN_MAX, AffineRegistration.TransformType.RIGID, false, AffineRegistration.MetricType.CORRELATION, 0.1, AffineRegistration.OptimizerType.EXTENSIVE_DIRECTION );

Console.WriteLine( "outputTransform:" + result.ToString() );
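Once a 4x4 transformation has been obtained, it can be applied to point coordinates using homogeneous coordinates. The sketch below assumes that the matrix is available as a row-major nested list and that it maps coordinates of the moving image onto the fixed image; `apply_transform` is a hypothetical helper, and the example matrix is invented rather than produced by AffineRegistration.

```python
def apply_transform(matrix, point):
    """Apply a 4x4 homogeneous matrix (nested lists, row-major) to a 3D point."""
    x, y, z = point
    homogeneous = (x, y, z, 1.0)
    out = [sum(matrix[i][k] * homogeneous[k] for k in range(4)) for i in range(4)]
    # For affine matrices the last component is 1; dividing keeps the helper general.
    return tuple(v / out[3] for v in out[:3])


# Invented example: a pure translation of (5, -2, 0).
example_transform = [
    [1.0, 0.0, 0.0, 5.0],
    [0.0, 1.0, 0.0, -2.0],
    [0.0, 0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0, 1.0],
]

moved_point = apply_transform(example_transform, (1.0, 1.0, 1.0))
# moved_point is (6.0, -1.0, 1.0)
```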