Classification

Algorithms that partition an image into a label image by assigning each pixel to a class.

- AutoSegmentation3Phases: Performs an automatic 2-level segmentation of a grayscale image.

- AutoIntensityClassification: Classifies all pixels of a grayscale image using the k-means clustering method.

- SupervisedTextureClassification2d: Performs a segmentation of a two-dimensional grayscale image, based on a texture model automatically built from a training input image.

- SupervisedTextureClassification3d: Performs a segmentation of a three-dimensional grayscale image, based on a texture model automatically built from a training input image.

- TextureClassificationCreate: Creates a new object model for performing a texture classification.

- TextureClassificationTrain: Enriches a texture model by training it on a gray level image that has been labeled.

- TextureClassificationApply: Classifies all pixels of an image using a trained texture model.

Pixel classification techniques allow the segmentation of an image into different regions relative to a set of attributes.

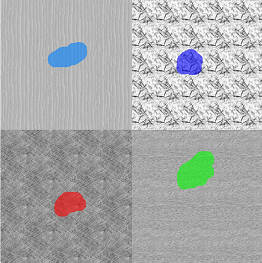

Figure 1. (a) Training image overlaying a grayscale synthetic input image, (b) attempt at a 4-phase segmentation by gray level k-means classification, (c) segmentation by texture classification.

The attributes used by the classifier to identify the regions can be:

- Spectral: each attribute is a channel pixel value from a color or multi-band image.

- Spatial: each attribute is a measurement on a given neighborhood of the target pixel. Generally, these measurements reveal the local texture surrounding the pixel.

Classification methods fall into two categories:

- Unsupervised classification: the input image is automatically partitioned into a predefined number of regions.

- Supervised classification: regions are learned by using a training step on representative samples.

Unsupervised classification

Unsupervised classification methods classify image pixels without performing a training step with labeled data. The unsupervised classification methods proposed by ImageDev generate a label image by partitioning the input image into a predefined number of classes. Each label of the output image identifies a region having common intensity characteristics.

Two algorithms are available for performing unsupervised intensity classification:

- AutoSegmentation3Phases: classifies the input image histogram into three classes with the same methods as proposed by the AutoThresholdingBright algorithm.

- AutoIntensityClassification: classifies the input image into a user-defined number of classes with the k-means clustering method.
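
To make the k-means idea concrete, here is a minimal NumPy sketch of intensity clustering. It is not the ImageDev implementation: the function name `kmeans_intensity`, the evenly spaced initial centers, and the fixed iteration count are assumptions chosen for illustration.

```python
import numpy as np

def kmeans_intensity(image, n_classes, n_iter=20):
    """Label each pixel (1..n_classes) with its nearest intensity center."""
    values = image.astype(np.float64).ravel()
    # Assumption: centers start evenly spaced over the intensity range.
    centers = np.linspace(values.min(), values.max(), n_classes)
    for _ in range(n_iter):
        # Assignment step: index of the closest center for every pixel.
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        # Update step: move each center to the mean of its pixels.
        for k in range(n_classes):
            if np.any(labels == k):  # empty clusters keep their center
                centers[k] = values[labels == k].mean()
    labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
    return (labels + 1).reshape(image.shape), centers

image = np.array([[10, 12, 11],
                  [100, 98, 102],
                  [200, 205, 199]])
label_image, centers = kmeans_intensity(image, n_classes=3)
```

On this toy image, each row forms its own intensity cluster, so the three rows receive labels 1, 2, and 3.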

Supervised texture classification

The supervised texture classification tools aim to perform an image segmentation based on local textural features when classical intensity-based segmentation tools are not appropriate.

A texture classification workflow is composed of three steps:

- Creation of a texture classification model.

- Training of the texture model on a representative image.

- Application of the texture model to a grayscale image (texture segmentation).

Texture features

Two categories of textural feature descriptors are available:

- Features based on co-occurrence matrices

- Features based on intensity statistics

Co-occurrence based features

A co-occurrence matrix expresses the distribution of pairs of pixel values separated by a given offset vector over an image region. From co-occurrence matrices, one can derive statistics called Haralick's indicators that are commonly used for describing texture features.

More information about co-occurrence matrices and Haralick's textural features is available in the Cooccurrence2d algorithm documentation.
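
As an illustration of the definition above, the following NumPy sketch counts value pairs for one offset vector and derives one classic Haralick indicator (contrast). The helper `cooccurrence_matrix` is hypothetical and is not the Cooccurrence2d algorithm; it assumes an image already quantized to `n_levels` integer values and builds a non-symmetric matrix.

```python
import numpy as np

def cooccurrence_matrix(image, offset, n_levels):
    """Count pairs (image[p], image[p + offset]) where both pixels exist."""
    dy, dx = offset
    h, w = image.shape
    matrix = np.zeros((n_levels, n_levels), dtype=np.int64)
    for y in range(max(0, -dy), min(h, h - dy)):
        for x in range(max(0, -dx), min(w, w - dx)):
            matrix[image[y, x], image[y + dy, x + dx]] += 1
    return matrix

image = np.array([[0, 0, 1],
                  [0, 1, 1],
                  [2, 2, 2]])
# Offset (0, 1): each pixel is paired with its right-hand neighbor.
glcm = cooccurrence_matrix(image, offset=(0, 1), n_levels=3)

# Contrast: sum over (i, j) of (i - j)^2 * p(i, j), with p the normalized matrix.
p = glcm / glcm.sum()
i, j = np.indices(glcm.shape)
contrast = ((i - j) ** 2 * p).sum()
```

Here six horizontal pairs are counted; only the two (0, 1) pairs have differing values, so the contrast is 2/6.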

Data Ranges: The minimum and maximum values of the co-occurrence matrix are extracted from the minimum and maximum intensities of the first image used during the training step. Consequently, for enriching a classification model or segmenting several images with the same model, all images must have a data range consistent with the first training image. If not, the data must be normalized before processing; for example, with the RescaleIntensity or MatchContrast algorithm. This range is quantized automatically to reduce the memory footprint and computational costs.

Texton: This term refers to a basic texture element whose size and shape can define a set of vectors used for determining a co-occurrence matrix. A texton expresses the orientation and spacing of a repeated texture pattern (for example, stripes).

Two groups of co-occurrence based features are available. In both cases, the 13 Haralick texture features are computed for each co-occurrence vector defined by the input texton.

The features can then be applied in two ways:

- Directional co-occurrences: the Haralick features of each co-occurrence vector are considered as separate textural features. Thus the number of texture features computed is 13 times the number of co-occurrence vectors.

- Rotation invariant co-occurrences: for each Haralick feature, three statistics over all offset vectors are considered as separate textural features (range, mean, and variance). Thus 39 texture features are computed.
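
The feature counts of the two modes can be checked with a short NumPy sketch. The feature table below is filled with placeholder random values, and the texton with 4 offset vectors is hypothetical; only the shapes and the reduction over offset vectors matter here.

```python
import numpy as np

n_haralick = 13
n_vectors = 4  # hypothetical texton providing 4 offset vectors

# Placeholder table: one row per offset vector, one column per Haralick feature.
rng = np.random.default_rng(0)
per_vector = rng.random((n_vectors, n_haralick))

# Directional: every (vector, feature) pair is its own texture feature.
directional = per_vector.ravel()

# Rotation invariant: range, mean, and variance over the offset vectors.
rotation_invariant = np.concatenate([
    per_vector.max(axis=0) - per_vector.min(axis=0),
    per_vector.mean(axis=0),
    per_vector.var(axis=0),
])
```

With 4 offset vectors, the directional mode yields 52 features, while the rotation invariant mode always yields 39, whatever the number of vectors.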

Intensity statistics based features

This category mostly contains features that are described in the Intensity and Histogram categories of the measurement list of individual analysis.

Three groups of intensity-based statistics are available:

- First order statistics: mean, variance, skewness, kurtosis, and variation.

- Histogram statistics: histogram quantiles, a peak measurement, energy, and entropy.

- Intensity: input image intensities.

The first group relies on a fast implementation of the first order statistics computation. Unlike the features of the second group, these features are not extracted from the local histogram, which reduces the computation time.
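
The first order statistics can be computed directly from the pixel values of a neighborhood, without building a histogram, as in this sketch. The exact definitions used by ImageDev are not given here, so several choices are assumptions: population (biased) moments, non-excess kurtosis, and "variation" read as the coefficient of variation (standard deviation divided by mean).

```python
import numpy as np

def first_order_statistics(window):
    """First order statistics of the pixel values in a local window."""
    values = np.asarray(window, dtype=np.float64).ravel()
    mean = values.mean()
    variance = values.var()  # population variance
    std = np.sqrt(variance)
    centered = values - mean
    # Standardized third and fourth moments; 0 for a constant window.
    skewness = (centered ** 3).mean() / std ** 3 if std > 0 else 0.0
    kurtosis = (centered ** 4).mean() / std ** 4 if std > 0 else 0.0
    # Assumption: "variation" is the coefficient of variation.
    variation = std / mean if mean != 0 else 0.0
    return {"mean": mean, "variance": variance, "skewness": skewness,
            "kurtosis": kurtosis, "variation": variation}

stats = first_order_statistics([[2, 4],
                                [4, 6]])
```

For this symmetric window, the mean is 4, the variance is 2, and the skewness is 0.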

Classification model creation

The texture model creation step consists of initializing a classification object by defining:

- The number of classes to determine; that is, the maximum label of the training images.

- The textural feature groups to compute for classifying textures.

- The radius range of the local neighborhood for computing texture features.

The radius of analysis must be sufficiently large to model the entire texture. If the radius is too small, the algorithm will fail to classify complex textures. If it is too large, the algorithm will create strong artifacts at the region borders and will be computationally inefficient. Therefore, a radius range is evaluated by the training step in order to simplify the tuning of the algorithm. This range is defined by a minimum, a maximum, and a step.
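
As a rough illustration of how a (minimum, maximum, step) range expands into candidate radii, consider the sketch below. Two points are assumptions rather than documented behavior: the maximum is treated as inclusive, and the neighborhood is taken to be a square window of side 2 * radius + 1, which is used only to show how the cost grows with the radius.

```python
def radius_candidates(minimum, maximum, step):
    """Candidate radii from minimum to maximum (assumed inclusive)."""
    return list(range(minimum, maximum + 1, step))

radii = radius_candidates(2, 14, 4)

# Assumed square window: pixel count grows quadratically with the radius.
window_pixel_counts = [(2 * r + 1) ** 2 for r in radii]
```

A coarse step keeps the number of evaluated radii small, which matters because each additional radius multiplies the number of features to extract during training.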

Texture training

This step consists of enriching a classification model by learning on a gray level image that has been labeled. Each label value of the training image identifies a class. Consequently, the same label value can be used in different connected components.

Feature extraction

For each labeled pixel of the training image, the algorithm extracts all the texture features belonging to the groups selected in the texture model. These newly extracted features increase the training set and enrich the model.

Training border management: Note that all the pixels within the analysis radius of a labeled pixel will be considered for the feature extraction, even if they do not have the same label value as the considered pixel. This means that the number of pixels used for training is greater than the number of labeled pixels.
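
The effect of the analysis radius on the number of pixels involved in training can be sketched as follows. The helper `contributing_pixels` is hypothetical and assumes a square neighborhood and a label value of 0 for unlabeled pixels; the neighborhood shape actually used by the algorithm is not specified here.

```python
import numpy as np

def contributing_pixels(label_image, radius):
    """Mask of pixels lying within `radius` of at least one labeled pixel."""
    labeled = label_image > 0  # assumption: 0 means unlabeled
    h, w = labeled.shape
    contributing = np.zeros_like(labeled)
    for y, x in zip(*np.nonzero(labeled)):
        # Mark the (clipped) square neighborhood of this labeled pixel.
        contributing[max(0, y - radius):min(h, y + radius + 1),
                     max(0, x - radius):min(w, x + radius + 1)] = True
    return contributing

labels = np.zeros((5, 5), dtype=np.int32)
labels[2, 2] = 1  # a single labeled pixel
mask = contributing_pixels(labels, radius=1)
n_labeled = int((labels > 0).sum())
n_contributing = int(mask.sum())
```

With one labeled pixel and a radius of 1, nine pixels contribute to the extracted features.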

Feature selection

Once the features are computed on the training image, the classification model is updated and all the features that are not discriminating enough or too strongly correlated with another feature are rejected.

For a given set of features, a separation power expressed in percent is computed. This value quantifies how well this set of features discriminates the learned classes. A measure is rejected if its contribution does not increase the separation power of the classification model enough.

The minSeparationPercentage parameter is a rate, in percent, that indicates the minimum relative increase of the separation power brought by a feature to select it. A higher value will tend to reduce the number of features actually used for classification and thus to lower the computation time of the classification.
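
One possible reading of the "minimum relative increase" rule is sketched below. The function `accept_feature` and its handling of a zero starting power are assumptions for illustration; how the separation power itself is computed is not covered here.

```python
def accept_feature(current_power, new_power, min_separation_percentage):
    """Accept a feature when the relative gain in separation power is large enough."""
    if current_power <= 0:
        # Assumption: any positive power is an improvement over an empty model.
        return new_power > 0
    relative_gain = 100.0 * (new_power - current_power) / current_power
    return relative_gain >= min_separation_percentage

# Separation power rises from 60 % to 66 %: a relative gain of 10 %.
kept = accept_feature(60.0, 66.0, min_separation_percentage=3.0)
# Separation power rises from 60 % to 61 %: a relative gain below 2 %.
rejected = accept_feature(60.0, 61.0, min_separation_percentage=3.0)
```

Under this reading, raising the threshold rejects more marginal features, which matches the stated effect of lowering the classification time.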

Classification application

This step classifies all the pixels of an image in accordance with a texture model and generates a new label image where each label corresponds to a texture class.

For every pixel of the input image, the algorithm extracts the texture features selected in the classification model and computes their Mahalanobis distance to each class center of the model.

Finally, the classification step outputs:

- A label image where each pixel value corresponds to the identifier of the closest class (its label intensity used at the training step).

- A float image where each pixel value corresponds to a metric representing an uncertainty score (uncertainty map). By default, this metric is the distance to the closest class, but it can also be a score taking into account the level of ambiguity of the classification or the distance to each learned class.
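
The two outputs can be illustrated with a minimal NumPy sketch of nearest-class assignment by Mahalanobis distance. It is not the TextureClassificationApply implementation: the function name, the use of one covariance matrix per class, and the 1-based class identifiers are assumptions.

```python
import numpy as np

def classify_mahalanobis(features, class_means, class_covariances):
    """features: (H, W, F) array. Returns a label image and a distance map."""
    h, w, f = features.shape
    flat = features.reshape(-1, f)
    distances = np.empty((flat.shape[0], len(class_means)))
    for k, (mean, cov) in enumerate(zip(class_means, class_covariances)):
        diff = flat - mean
        inv_cov = np.linalg.inv(cov)
        # sqrt(diff^T * inv_cov * diff), evaluated for every pixel at once.
        distances[:, k] = np.sqrt(np.einsum("ij,jk,ik->i", diff, inv_cov, diff))
    labels = distances.argmin(axis=1) + 1  # assumed 1-based class identifiers
    uncertainty = distances.min(axis=1)    # distance to the closest class
    return labels.reshape(h, w), uncertainty.reshape(h, w)

# Three pixels with two features each, and two classes with identity covariance.
features = np.array([[[0.0, 0.0], [4.0, 4.0], [1.0, 0.0]]])
means = [np.array([0.0, 0.0]), np.array([4.0, 4.0])]
covs = [np.eye(2), np.eye(2)]
label_image, uncertainty_map = classify_mahalanobis(features, means, covs)
```

The third pixel lies at distance 1 from the first class and 5 from the second, so it receives label 1 with an uncertainty value of 1.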