TensorVoting2d

Strengthens local features in a two-dimensional image according to their consistency with a model of smooth curves.


The purpose of this algorithm is to fill gaps in the features that appear in a score image, following the orientations given by a vector image. The structural information contained in the feature image is propagated through a voting field.

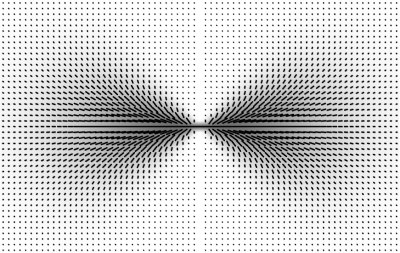

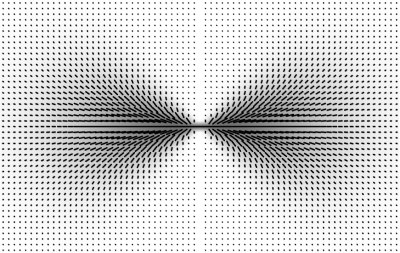

The voting field assumes that the best connection between two points, when the orientation is known at one of them, is a circular arc. The strength of the vote decays with the radius according to a Gaussian function.

The voting field size (that is, the maximum length of gaps to fill) is defined by a scale parameter.

This voting field can be illustrated on the unit sphere:

Figure 1. Example of a stick voting field
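The geometry described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the library's implementation: the function name `stick_vote` is hypothetical, the Gaussian standard deviation is assumed to be equal to the scale parameter, and any angular attenuation or normalization applied by the actual filter is not modeled. The voter sits at the origin and is oriented along the x axis.

```python
import math


def stick_vote(dx, dy, scale):
    """Vote cast by a voter at the origin, oriented along the x axis,
    to a receiver located at offset (dx, dy).

    Returns (weight, angle): the Gaussian radial weight and the
    orientation of the circular arc at the receiver, in radians modulo pi.
    """
    r = math.hypot(dx, dy)
    if r == 0.0:
        # The voter votes for its own orientation with full strength.
        return 1.0, 0.0
    phi = math.atan2(dy, dx)
    # A circle tangent to the x axis at the origin and passing through the
    # receiver has, at the receiver, a tangent rotated by 2 * phi.
    angle = (2.0 * phi) % math.pi
    # Assumption: Gaussian decay with the radius, using the scale as sigma.
    weight = math.exp(-(r * r) / (2.0 * scale * scale))
    return weight, angle


if __name__ == "__main__":
    for offset in [(3.0, 0.0), (3.0, 3.0), (10.0, 0.0)]:
        print(offset, stick_vote(offset[0], offset[1], scale=5.0))
```

With this model, a receiver lying on the voter's own axis receives a vote with the same orientation, and the vote weakens as the receiver moves away, which is why the scale controls the length of the gaps that can be bridged.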

This filter can compute several outputs, each of which is enabled or disabled through a selection parameter.

Select only the outputs that the application needs, in order to minimize memory usage and computation time.

This filter can provide a spectral image output where each channel represents a tensor component, in the following order: $I_{xx}$, $I_{xy}$, $I_{yy}$.
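To make the channel order concrete, the sketch below encodes an orientation as a symmetric 2D tensor and returns its components as $(I_{xx}, I_{xy}, I_{yy})$. The outer-product encoding is the usual tensor voting convention and is assumed here; this page does not state how the library builds its tensors internally, and the helper names `stick_tensor` and `add_tensors` are hypothetical.

```python
import math


def stick_tensor(vx, vy, strength=1.0):
    """Encode the orientation of (vx, vy) as a tensor (Ixx, Ixy, Iyy).

    Only the orientation matters: the norm and the sign of the vector
    are discarded by the normalization and the outer product.
    """
    norm = math.hypot(vx, vy)
    if norm == 0.0:
        return (0.0, 0.0, 0.0)
    ux, uy = vx / norm, vy / norm
    return (strength * ux * ux, strength * ux * uy, strength * uy * uy)


def add_tensors(a, b):
    """Accumulate two tensors component by component."""
    return tuple(x + y for x, y in zip(a, b))


if __name__ == "__main__":
    # Opposite vectors of different norms describe the same orientation.
    print(stick_tensor(1.0, 0.0), stick_tensor(-2.0, 0.0))
    # Two orthogonal orientations accumulate into an isotropic tensor.
    print(add_tensors(stick_tensor(1.0, 0.0), stick_tensor(0.0, 3.0)))
```

Accumulating tensors rather than vectors lets agreeing orientations reinforce each other and conflicting ones produce an isotropic result instead of cancelling out.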

The saliency, ballness, and orientation vector images can be extracted from this tensor image. These metrics are defined as follows:


- Saliency $=\lambda _{1}-\lambda _{2}$. It can be interpreted as a measure of the orientation certainty or of the feature anisotropy.

- Ballness $=\lambda _{2}$. It can be interpreted as a measure of the orientation uncertainty or of the feature isotropy.

- Orientation $=e_{1}$. It represents the main orientation of the output tensor.

where:

- $\lambda_{1}$ and $\lambda_{2}$ are, respectively, the largest and the smallest eigenvalue of the output tensor.

- $e_{1}$ is the eigenvector associated with the eigenvalue $\lambda_{1}$.
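The definitions above can be checked on a single pixel with the closed-form eigendecomposition of a symmetric 2x2 matrix. This is a minimal sketch in plain Python, not the filter's code; the function name `analyze_tensor` is hypothetical and the input is assumed to be the three tensor components of one pixel.

```python
import math


def analyze_tensor(ixx, ixy, iyy):
    """Return (saliency, ballness, e1) for the tensor [[ixx, ixy], [ixy, iyy]]."""
    mean = 0.5 * (ixx + iyy)
    # Half the gap between the two eigenvalues.
    root = math.hypot(0.5 * (ixx - iyy), ixy)
    lambda1 = mean + root  # largest eigenvalue
    lambda2 = mean - root  # smallest eigenvalue
    # Angle of the principal axis, i.e. of the eigenvector e1.
    # For an isotropic tensor this angle is arbitrary (0 here).
    theta = 0.5 * math.atan2(2.0 * ixy, ixx - iyy)
    e1 = (math.cos(theta), math.sin(theta))
    saliency = lambda1 - lambda2
    ballness = lambda2
    return saliency, ballness, e1


if __name__ == "__main__":
    saliency, ballness, e1 = analyze_tensor(0.5, 0.5, 0.5)
    # Orientation vector whose norm equals the saliency.
    vector = (saliency * e1[0], saliency * e1[1])
    print(saliency, ballness, e1, vector)
```

A pure stick tensor yields a ballness of zero and a saliency equal to its strength, whereas an isotropic tensor yields a saliency of zero, in which case the orientation is not meaningful.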

This method is described in the following publication by Franken et al.:

E. Franken, M. van Almsick, P. Rongen, L. Florack, B. ter Haar Romeny. "An efficient method for tensor voting using steerable filters". Computer Vision - ECCV 2006, Springer, pp. 228-240, 2006.


Function Syntax

In C++, this function returns a TensorVoting2dOutput structure containing the outputTensorImage, outputVectorImage, outputSaliencyImage and outputBallnessImage output parameters.

// Output structure.

struct TensorVoting2dOutput

{

std::shared_ptr< iolink::ImageView > outputTensorImage;

std::shared_ptr< iolink::ImageView > outputVectorImage;

std::shared_ptr< iolink::ImageView > outputSaliencyImage;

std::shared_ptr< iolink::ImageView > outputBallnessImage;

};

// Function prototype.

TensorVoting2dOutput

tensorVoting2d( std::shared_ptr< iolink::ImageView > inputFeatureImage,

std::shared_ptr< iolink::ImageView > inputVectorImage,

double scale,

int32_t outputSelection,

std::shared_ptr< iolink::ImageView > outputTensorImage = NULL,

std::shared_ptr< iolink::ImageView > outputVectorImage = NULL,

std::shared_ptr< iolink::ImageView > outputSaliencyImage = NULL,

std::shared_ptr< iolink::ImageView > outputBallnessImage = NULL );

In Python, this function returns a tuple containing the output_tensor_image, output_vector_image, output_saliency_image and output_ballness_image output parameters.

# Function prototype.

tensor_voting_2d( input_feature_image,

input_vector_image,

scale = 5,

output_selection = 4,

output_tensor_image = None,

output_vector_image = None,

output_saliency_image = None,

output_ballness_image = None )

In C#, this function returns a TensorVoting2dOutput structure containing the outputTensorImage, outputVectorImage, outputSaliencyImage and outputBallnessImage output parameters.

/// Output structure of the TensorVoting2d function.

public struct TensorVoting2dOutput

{

public IOLink.ImageView outputTensorImage;

public IOLink.ImageView outputVectorImage;

public IOLink.ImageView outputSaliencyImage;

public IOLink.ImageView outputBallnessImage;

};

// Function prototype.

public static TensorVoting2dOutput

TensorVoting2d( IOLink.ImageView inputFeatureImage,

IOLink.ImageView inputVectorImage,

double scale = 5,

Int32 outputSelection = 4,

IOLink.ImageView outputTensorImage = null,

IOLink.ImageView outputVectorImage = null,

IOLink.ImageView outputSaliencyImage = null,

IOLink.ImageView outputBallnessImage = null );

Class Syntax

Parameters

| Class Name | TensorVoting2d |
|---|---|

| Parameter Name | Description | Type | Supported Values | Default Value |
|---|---|---|---|---|
| inputFeatureImage | The feature input image. It can be produced by the EigenvaluesToStructureness2d algorithm. This image must have only one spectral channel. | Image | Binary, Label or Grayscale | nullptr |
| inputVectorImage | The vector input image indicating the orientation of the feature to strengthen. It can be produced by the EigenDecomposition2d algorithm. When used with an edge detector such as a Hessian matrix, the second eigenvector has to be selected since the first eigenvector is orthogonal to the structures. This image must have the same size as the feature input image and 2 spectral channels. Each vector is used to define an orientation and its norm is ignored. | Image | Binary, Label, Grayscale or Multispectral | nullptr |
| scale | The scale parameter acting on the gap length filled by the tensor voting algorithm. It is expressed in pixels. | Float64 | >0 | 5 |
| outputSelection | The output images to be computed. | MultipleChoice | | SALIENCY |
| outputTensorImage | The tensor output corresponding to the strengthened image. The spatial dimensions, calibration, and interpretation of the output image are forced to the same values as the feature input. Type is forced to float. | Image | | nullptr |
| outputVectorImage | The orientation vector output image, defining the main orientation of the output tensor. The spatial dimensions, calibration, and interpretation of the output image are forced to the same values as the feature input. The size of the spectral series component is forced to 2. Each vector defines the main orientation of the output tensor and its norm is equal to the saliency. Type is forced to float. | Image | | nullptr |
| outputSaliencyImage | The saliency output image representing the anisotropy of the structures. The spatial dimensions, calibration, and interpretation of the output image are forced to the same values as the feature input. Type is forced to float. | Image | | nullptr |
| outputBallnessImage | The ballness output image representing the isotropy of the structures. The spatial dimensions, calibration, and interpretation of the output image are forced to the same values as the feature input. Type is forced to float. | Image | | nullptr |

Object Examples

auto retina_structureness = readVipImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "retina_structureness.vip" );

auto retina_orientation_vector = readVipImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "retina_orientation_vector.vip" );

TensorVoting2d tensorVoting2dAlgo;

tensorVoting2dAlgo.setInputFeatureImage( retina_structureness );

tensorVoting2dAlgo.setInputVectorImage( retina_orientation_vector );

tensorVoting2dAlgo.setScale( 5 );

tensorVoting2dAlgo.setOutputSelection( 4 );

tensorVoting2dAlgo.execute();

std::cout << "outputTensorImage:" << tensorVoting2dAlgo.outputTensorImage()->toString();

std::cout << "outputVectorImage:" << tensorVoting2dAlgo.outputVectorImage()->toString();

std::cout << "outputSaliencyImage:" << tensorVoting2dAlgo.outputSaliencyImage()->toString();

std::cout << "outputBallnessImage:" << tensorVoting2dAlgo.outputBallnessImage()->toString();

retina_structureness = imagedev.read_vip_image(imagedev_data.get_image_path("retina_structureness.vip"))

retina_orientation_vector = imagedev.read_vip_image(imagedev_data.get_image_path("retina_orientation_vector.vip"))

tensor_voting_2d_algo = imagedev.TensorVoting2d()

tensor_voting_2d_algo.input_feature_image = retina_structureness

tensor_voting_2d_algo.input_vector_image = retina_orientation_vector

tensor_voting_2d_algo.scale = 5

tensor_voting_2d_algo.output_selection = 4

tensor_voting_2d_algo.execute()

print( "output_tensor_image:", str( tensor_voting_2d_algo.output_tensor_image ) )

print( "output_vector_image:", str( tensor_voting_2d_algo.output_vector_image ) )

print( "output_saliency_image:", str( tensor_voting_2d_algo.output_saliency_image ) )

print( "output_ballness_image:", str( tensor_voting_2d_algo.output_ballness_image ) )

ImageView retina_structureness = Data.ReadVipImage( @"Data/images/retina_structureness.vip" );

ImageView retina_orientation_vector = Data.ReadVipImage( @"Data/images/retina_orientation_vector.vip" );

TensorVoting2d tensorVoting2dAlgo = new TensorVoting2d

{

inputFeatureImage = retina_structureness,

inputVectorImage = retina_orientation_vector,

scale = 5,

outputSelection = 4

};

tensorVoting2dAlgo.Execute();

Console.WriteLine( "outputTensorImage:" + tensorVoting2dAlgo.outputTensorImage.ToString() );

Console.WriteLine( "outputVectorImage:" + tensorVoting2dAlgo.outputVectorImage.ToString() );

Console.WriteLine( "outputSaliencyImage:" + tensorVoting2dAlgo.outputSaliencyImage.ToString() );

Console.WriteLine( "outputBallnessImage:" + tensorVoting2dAlgo.outputBallnessImage.ToString() );

Function Examples

auto retina_structureness = readVipImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "retina_structureness.vip" );

auto retina_orientation_vector = readVipImage( std::string( IMAGEDEVDATA_IMAGES_FOLDER ) + "retina_orientation_vector.vip" );

auto result = tensorVoting2d( retina_structureness, retina_orientation_vector, 5, 4 );

std::cout << "outputTensorImage:" << result.outputTensorImage->toString();

std::cout << "outputVectorImage:" << result.outputVectorImage->toString();

std::cout << "outputSaliencyImage:" << result.outputSaliencyImage->toString();

std::cout << "outputBallnessImage:" << result.outputBallnessImage->toString();

retina_structureness = imagedev.read_vip_image(imagedev_data.get_image_path("retina_structureness.vip"))

retina_orientation_vector = imagedev.read_vip_image(imagedev_data.get_image_path("retina_orientation_vector.vip"))

result_output_tensor_image, result_output_vector_image, result_output_saliency_image, result_output_ballness_image = imagedev.tensor_voting_2d( retina_structureness, retina_orientation_vector, 5, 4 )

print( "output_tensor_image:", str( result_output_tensor_image ) )

print( "output_vector_image:", str( result_output_vector_image ) )

print( "output_saliency_image:", str( result_output_saliency_image ) )

print( "output_ballness_image:", str( result_output_ballness_image ) )

ImageView retina_structureness = Data.ReadVipImage( @"Data/images/retina_structureness.vip" );

ImageView retina_orientation_vector = Data.ReadVipImage( @"Data/images/retina_orientation_vector.vip" );

Processing.TensorVoting2dOutput result = Processing.TensorVoting2d( retina_structureness, retina_orientation_vector, 5, 4 );

Console.WriteLine( "outputTensorImage:" + result.outputTensorImage.ToString() );

Console.WriteLine( "outputVectorImage:" + result.outputVectorImage.ToString() );

Console.WriteLine( "outputSaliencyImage:" + result.outputSaliencyImage.ToString() );

Console.WriteLine( "outputBallnessImage:" + result.outputBallnessImage.ToString() );